Virtual reality (VR) has surged in popularity recently, and as headset makers work to keep up with hardware demand, developers are working to keep up with users’ need for engaging content. VR isn’t the only technology that’s seen an increase in popularity. In today’s professional world, live video streaming has become a standard way to connect and collaborate. This creates an interesting opportunity for developers to build applications that combine virtual reality with video streaming to remove the barriers of distance and create an immersive telepresence experience.

VR developers face two unique problems:

- How do you make VR more inclusive and allow users to share their POV with people who aren’t using VR headsets?

- How do you bring non-VR participants into a VR environment?

Most VR headsets allow users to mirror their POV to a nearby screen using a casting technology (Miracast or Chromecast). This approach requires one physical screen per VR headset, and the headset and screen must be in the same room. This type of streaming feels very old-school, given that most users today expect the freedom to stream video to others who are remotely located.

In this guide, I’m going to walk through building a Virtual Reality application that allows users to live stream their VR perspective. We’ll also add the ability to have non-VR users live stream themselves into the virtual environment using their web browser.

We’ll build this entire project from within the Unity Editor without writing any code.

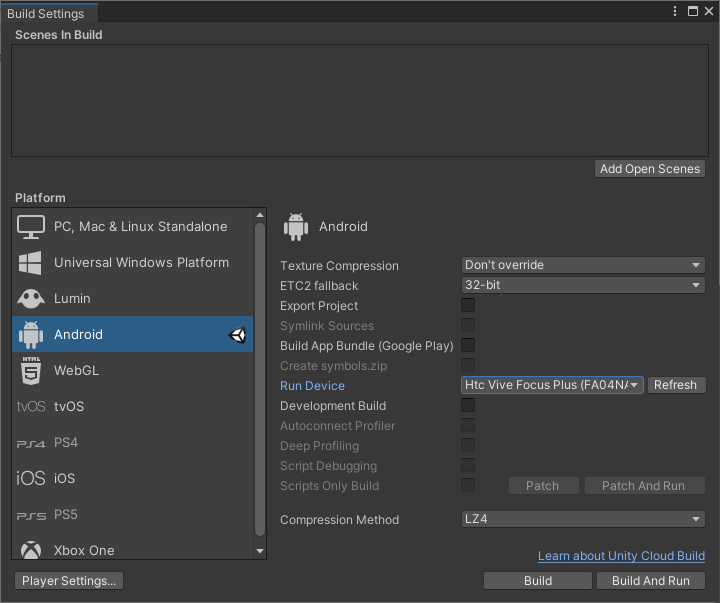

For this project, I’ll use an HTC Vive Focus Plus because it lets me build using Unity’s XR framework, which makes it relatively easy to set up a VR environment. And because the Vive builds to an Android target, I can use the Agora Video for Unity SDK to add live video streaming to the experience.

Project Overview

This project will consist of three parts. The first part will walk through how to set up the project, installing the Vive packages along with the Agora SDK and the Agora Virtual Camera prefab.

The second part will walk through creating the scene, including setting up the XR Rig with controllers, adding the 3D environment, creating the UI, and implementing the Agora Virtual Camera prefab.

The third part will show how to use a live streaming web app to test the video streaming between VR and non-VR users.

Part 1: Build a Unity XR app

Part 2: Create the Scene

- Set up 3D Environment & XR Camera Rig

- Add the XR Controllers

- Add Perspective Camera & Render Texture

- Add the UI Buttons and Remote Video

- Implement Agora Prefab

Part 3: Testing VR to Web Streams

Prerequisites

- Unity Editor

- VR headset compatible with Unity’s XR Framework (I’m using the HTC Vive Focus Plus)

- An understanding of the Unity Editor and Game Objects

- Basic understanding of Unity’s XR Framework

- An Agora developer account (see How to Get Started with Agora)

- An understanding of HTML, CSS, and JS (minimal knowledge needed)

- A simple web server (I like to use Live Server)

Note: While you don’t need deep Unity or web development experience to follow along, certain basic concepts from the prerequisites won’t be explained in detail.

Part 1: Build a Unity XR app

The first part of the project will build a Unity app using the XR Framework, walking through how to add the Vive registry to the project, download and install the Vive plug-ins, install the Agora Video for Unity Plug-in, and import the Agora Virtual Camera prefab, which we’ll implement with drag and drop in Part 2.

Set Up the Unity Project

Start by creating a Unity project using the 3D Template. For this demo, I’m using Unity 2019.4.18f1 LTS. If you wish to use Unity 2020, then you will need to use the Wave 4.0 SDK, which is currently in beta.

Note: At the time of writing, I had access to the beta Wave 4.0 SDK for the new HTC headset, but I chose to use the Wave 3.0 SDK. This project can be set up in exactly the same way using the Wave 4.0 SDK.

Enable the XR Framework and HTC Modules

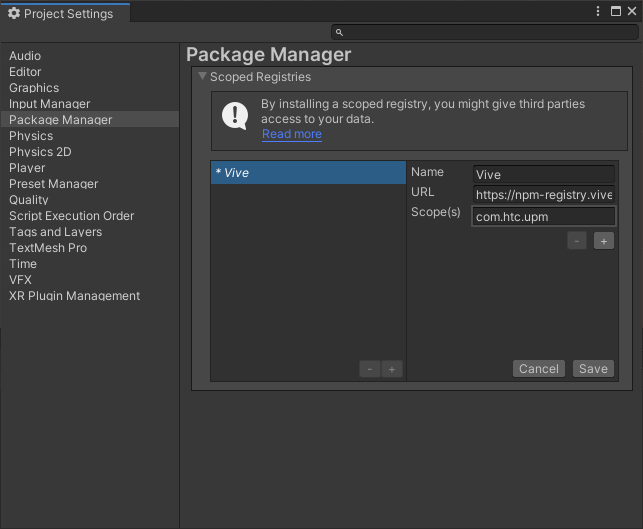

Once the new project has loaded in Unity, open the Project Settings and navigate to the Package Manager tab. In the Scoped Registries list, click the plus sign and add the Vive registry details.

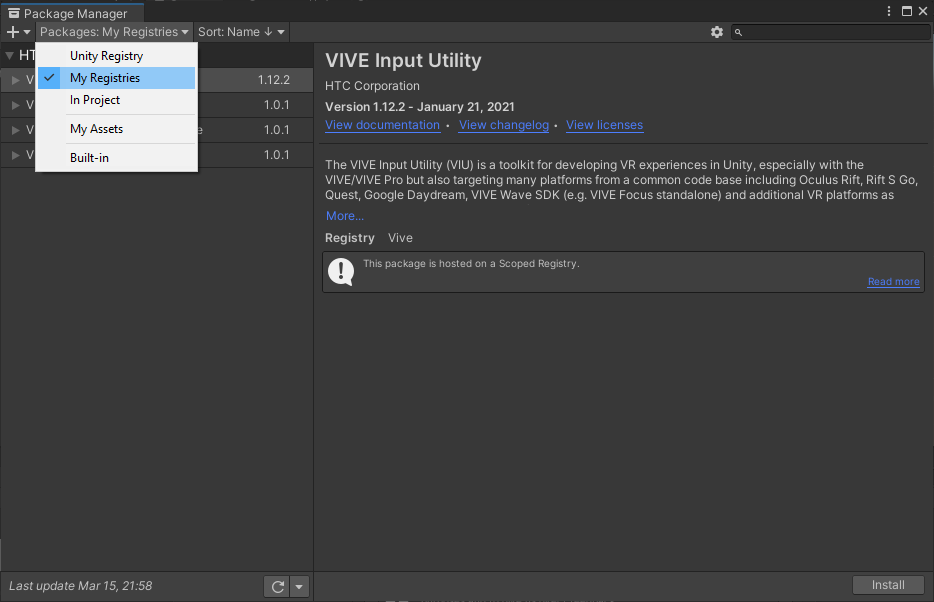
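Behind the Project Settings UI, Unity stores scoped registries in the project’s Packages/manifest.json file, in a top-level “scopedRegistries” array. The Python sketch below shows the shape of that entry and adds it only once. The registry values are my assumption based on HTC’s Wave setup documentation at the time of writing, so verify them against the current docs before relying on them.

```python
import json

# Assumption: registry details as listed in HTC's Wave setup documentation at
# the time of writing. Verify them against the current docs before use.
VIVE_REGISTRY = {
    "name": "VIVE",
    "url": "https://npm-registry.vive.com",
    "scopes": ["com.htc.upm"],
}


def add_scoped_registry(manifest: dict, registry: dict) -> dict:
    """Return a copy of a Packages/manifest.json dict with `registry` added.

    The entry is appended only if no existing registry uses the same URL,
    so applying it twice does not create a duplicate.
    """
    updated = json.loads(json.dumps(manifest))  # deep copy via a JSON round-trip
    registries = updated.setdefault("scopedRegistries", [])
    if not any(entry.get("url") == registry["url"] for entry in registries):
        registries.append(json.loads(json.dumps(registry)))
    return updated


if __name__ == "__main__":
    # Hypothetical minimal manifest; a real project lists its own dependencies.
    manifest = add_scoped_registry({"dependencies": {}}, VIVE_REGISTRY)
    print(json.dumps(manifest, indent=2))
```

Editing the file by hand and adding the registry through Project Settings should end up in the same place; the UI route is simply less error-prone.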

Once the Vive “scopedRegistries” object and its keys have been added, you’ll see the various loaders importing the files. Next, open Window > Package Manager and select Packages: My Registries. You will see the VIVE Wave packages. If no package is shown, click Refresh at the bottom-left corner.

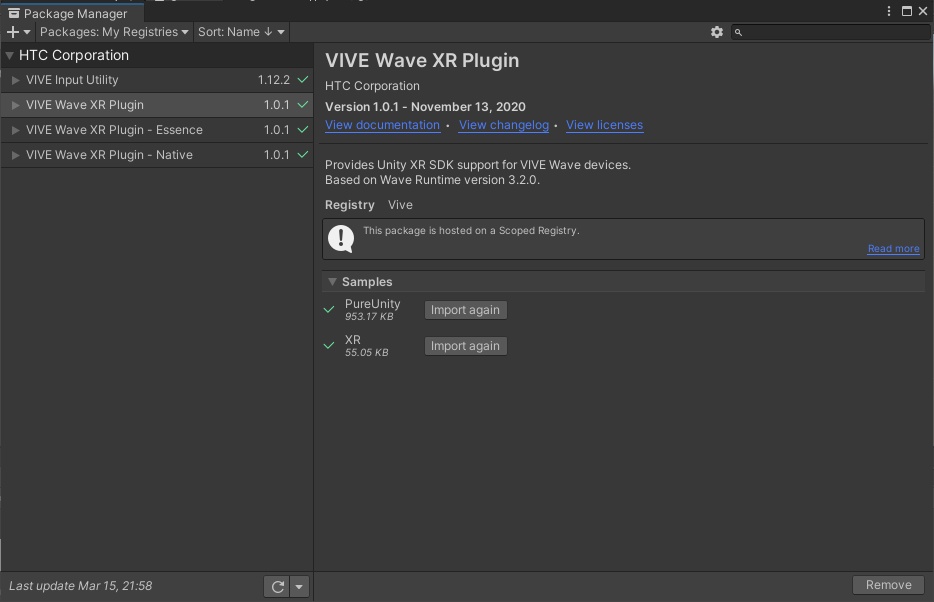

Click through and install each of the Vive packages. Once the packages finish installing, import the PureUnity and XR samples from the Vive Wave XR Plug-in and the Samples from the Essence Wave XR Plug-in into the project.

After the packages have finished installing and the samples have finished importing, the WaveXRPlayerSettingsConfigDialog window will appear. HTC recommends clicking Accept All to apply the recommended Player Settings.

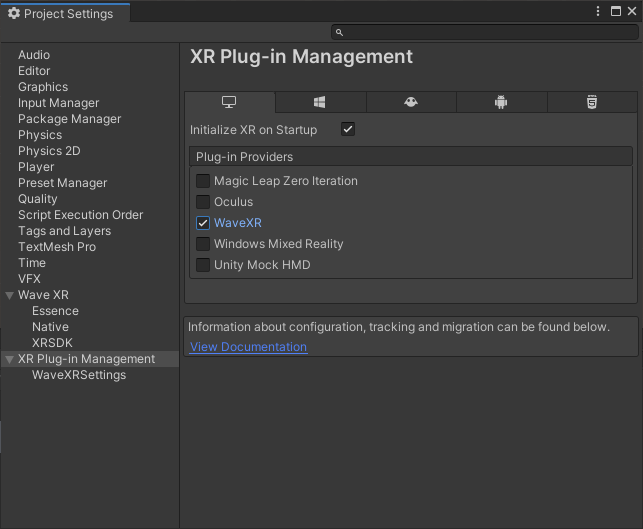

Next, open the Project Settings, click the XR Plug-in Management section, and make sure Wave XR is selected.

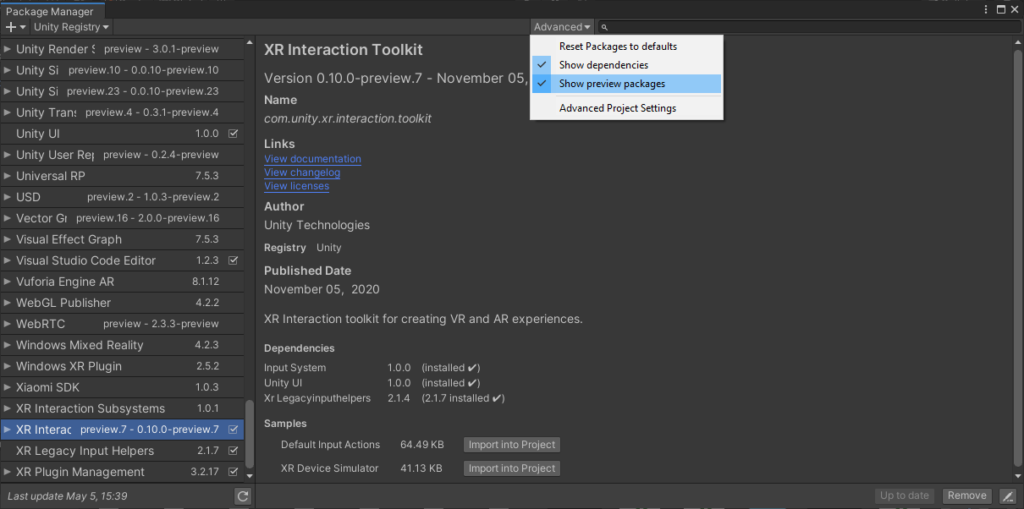

Now that we have the Wave SDK configured, we need to add Unity’s XR Interaction Toolkit package. This lets us use Unity’s XR components to interact with UI inputs and other elements in the scene. In the Package Manager, click the Advanced button (to the left of the search input) and enable the “Show preview packages” option. Once the preview packages are visible, scroll down to the XR Interaction Toolkit and click Install.

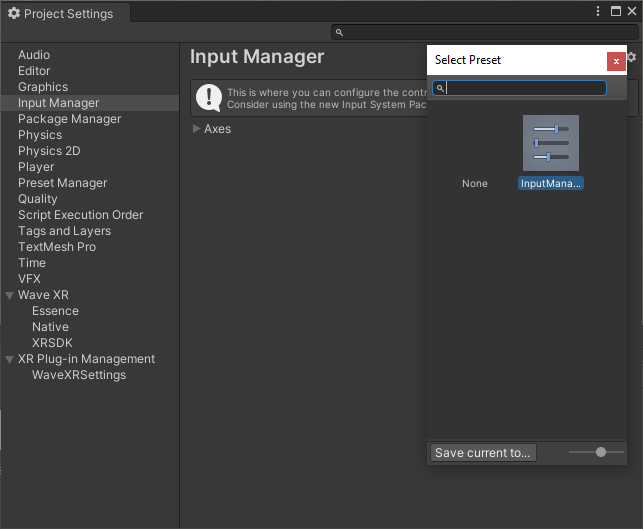

Note: If you are using Unity 2018, you will need to configure the Input Manager using the presets provided by HTC. The inputs can be defined manually, or you can download this InputManager preset. Once you've downloaded the preset, drag it into your Unity Assets, navigate to the InputManager tab in the Project Settings, and apply the presets.

When working with the Wave XR Plug-in, you can change the quality level by using QualitySettings.SetQualityLevel. HTC recommends setting the Anti Aliasing levels to 4x Multi Sampling in all quality levels. You can download this QualitySettings preset from HTC’s Samples documentation page.

For more information about Input and Quality Settings, see the HTC Wave Documentation.

Download the Agora Video SDK and Prefab

Open the Unity Asset Store, navigate to the Agora Video SDK for Unity page, and download the plug-in. Once the plug-in is downloaded, import it into your project.

Note: If you are using Unity 2020, the Asset Store is accessible through the web browser. When you import the asset into Unity, the import runs through the Package Manager UI.

The last step in the setup process is to download the Agora Virtual Camera Prefab package and import it into the project.

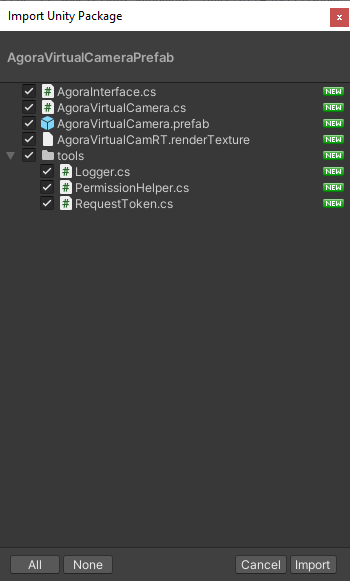

When you import the Agora Virtual Camera Prefab package, you’ll see that it contains a few scripts, a prefab, and a renderTexture. The two main scripts to note are AgoraInterface.cs (which contains a basic implementation of the Agora Video SDK) and AgoraVirtualCamera.cs (which implements and extends the AgoraInterface specifically to handle the virtual camera stream). The tools folder contains a few helper scripts: Logger.cs for logging, PermissionHelper.cs to request camera/mic permissions, and RequestToken.cs to handle token requests.

Also included in the package is the AgoraVirtualCamera.prefab, an empty GameObject with AgoraVirtualCamera.cs attached to it. This will make it easy for us to configure the Agora settings directly from the Unity Editor without having to write any code. The last file in the list is the AgoraVirtualCamRT.renderTexture, which we’ll use for rendering the virtual camera stream.
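To make the “implements and extends” relationship a bit more concrete, here is a rough Python sketch of how such a split can work. It is not the prefab’s C# code: the class and method names are hypothetical, and the frame-reading callback stands in for whatever the real script does with the render texture. The idea is that the base class owns the channel lifecycle, while the subclass adds a per-frame step that sends the render texture’s contents instead of a physical camera feed.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgoraInterfaceSketch:
    """Hypothetical stand-in for a base script that owns the channel lifecycle."""
    app_id: str
    channel: str
    joined: bool = False
    log: List[str] = field(default_factory=list)

    def join_channel(self) -> None:
        self.joined = True
        self.log.append(f"joined {self.channel}")

    def leave_channel(self) -> None:
        self.joined = False
        self.log.append(f"left {self.channel}")


@dataclass
class AgoraVirtualCameraSketch(AgoraInterfaceSketch):
    """Hypothetical subclass: streams render-texture frames instead of a webcam."""
    # Stand-in for reading pixels from the render texture (assumption).
    read_render_texture: Callable[[], bytes] = lambda: b""
    sent_frames: List[bytes] = field(default_factory=list)

    def on_frame(self) -> Optional[bytes]:
        """Called once per rendered frame; only sends while in the channel."""
        if not self.joined:
            return None
        frame = self.read_render_texture()
        self.sent_frames.append(frame)  # stand-in for handing the frame to the SDK
        return frame
```

Keeping the lifecycle in a base class means a different video source could reuse the same join, leave, and logging behavior.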

We are now done with installing all of our dependencies and can move on to building our XR video streaming app.

Part 2: Create the Scene

In this section, we will walk through how to set up our 3D environment using a 3D model, create an XR Camera Rig Game Object, create the buttons and other UI elements, and implement the Agora Virtual Camera prefab.

Set Up the 3D Environment and the XR Camera Rig

Create a scene or open the sample scene and import the environment model. You can download the model below from Sketchfab.

3D model hosted on Sketchfab: https://skfb.ly/onQoO

Once the model is imported into the project, drag it into the Scene Hierarchy. This will scale and place the model at (0,0,0) with the correct orientation.

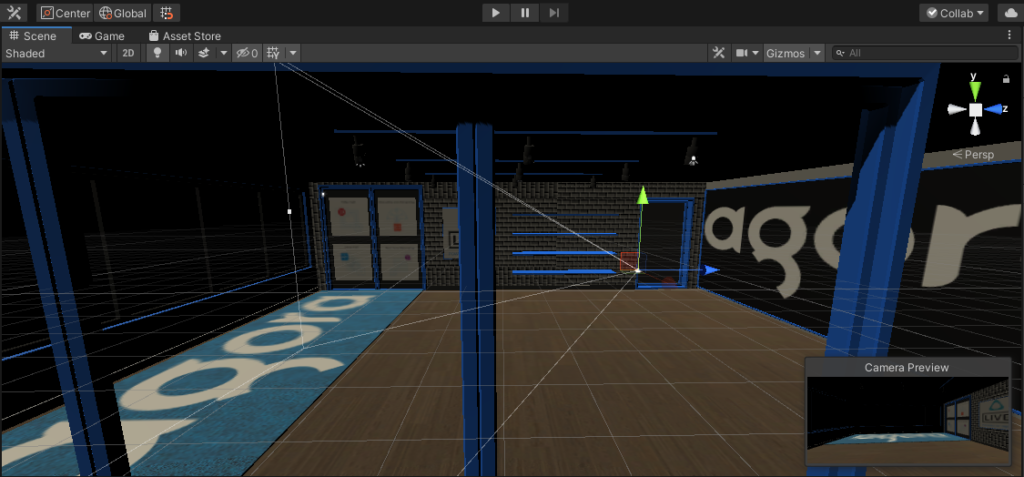

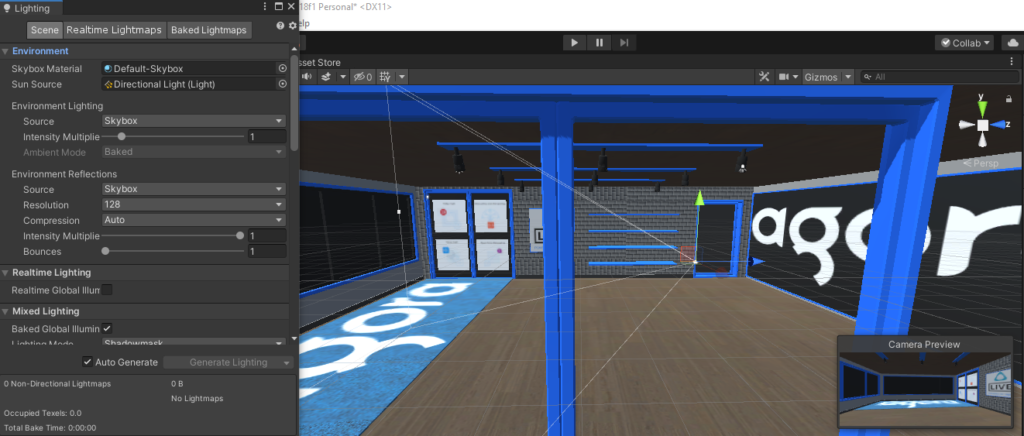

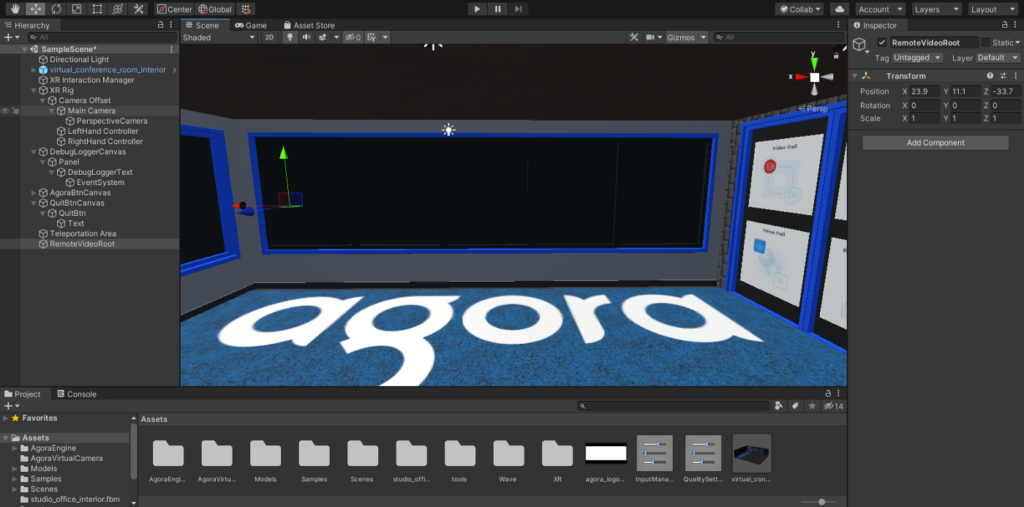

You may have noticed that the scene looks really dark. Let’s fix that by generating lighting. Select Window > Rendering > Lighting Settings, then select the Auto Generate option at the bottom of the window and watch as Unity updates the lighting. The lit scene should look like this:

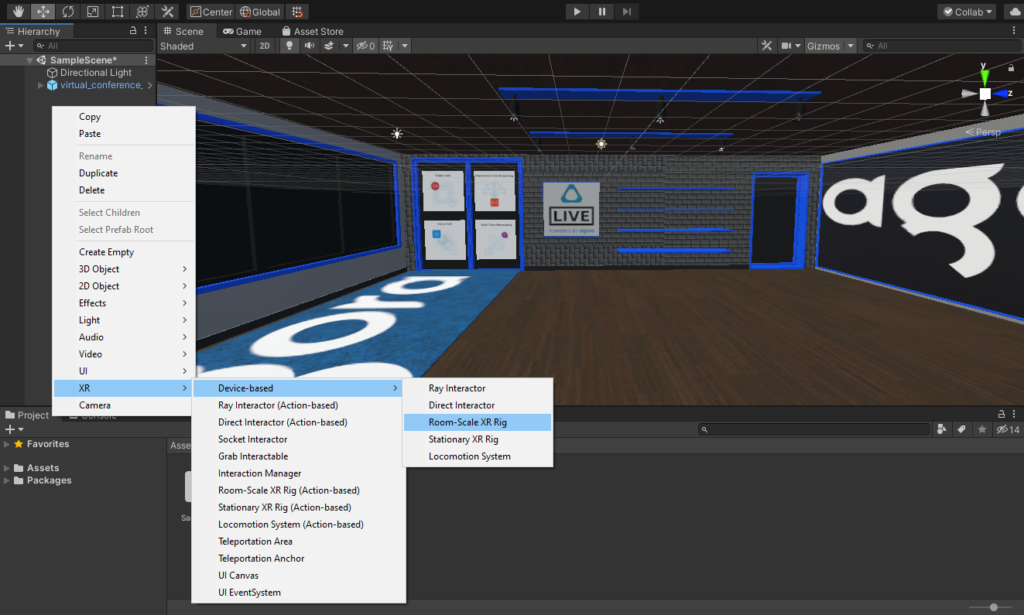

Next, we’ll add a room-scale XR rig to the scene. Start by deleting the Main Camera Game Object from the scene, then right-click in the Scene Hierarchy, navigate to the XR options, and click “Device-based > Room Scale XR Rig”.

Add the XR Controllers

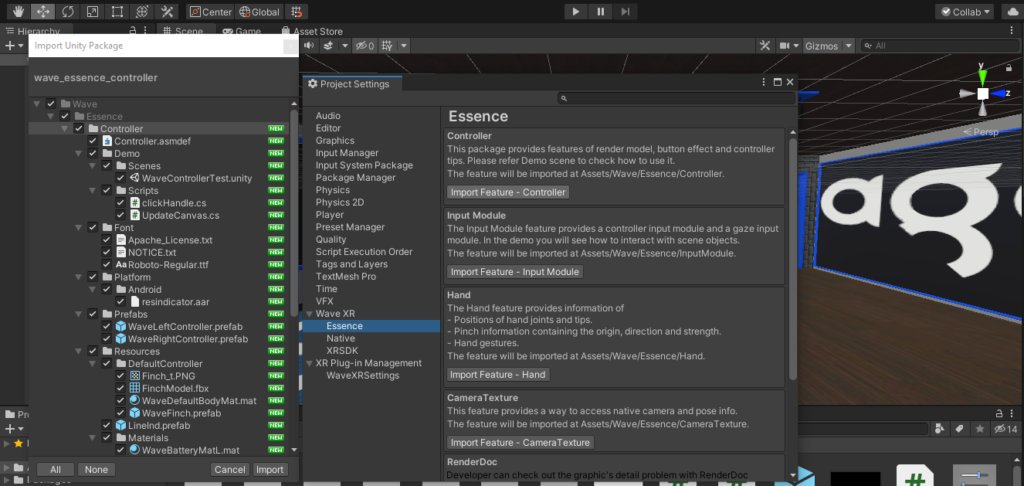

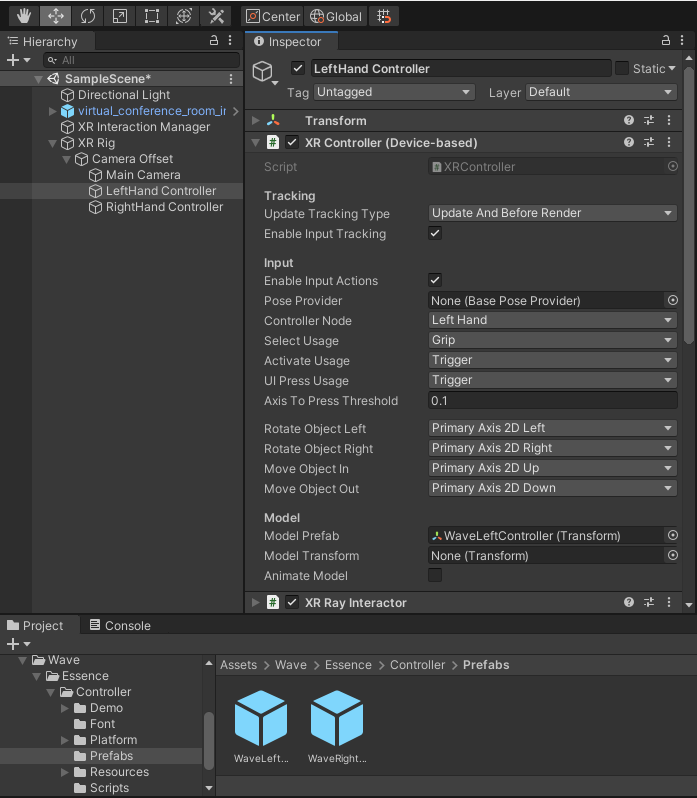

The last step for setting up our XR Rig is to set the HTC prefabs for the LeftHand Controller and RightHand Controller Game Objects. To do this, we’ll need to import the HTC Essence Controller package. Open the Project Settings, navigate to the Wave XR > Essence tab, and import the “Controller” package.

Once Unity has finished importing the “Controller” package, in the Assets panel navigate to the Wave > Essence > Prefabs folder. The Prefabs folder contains prefabs for the Left and Right Controllers.

Select the LeftController Game Object from the Scene Hierarchy, and drag the WaveLeftController prefab into the Model Prefab input for the “XR Controller (Device Based)” script that is attached to the Game Object.

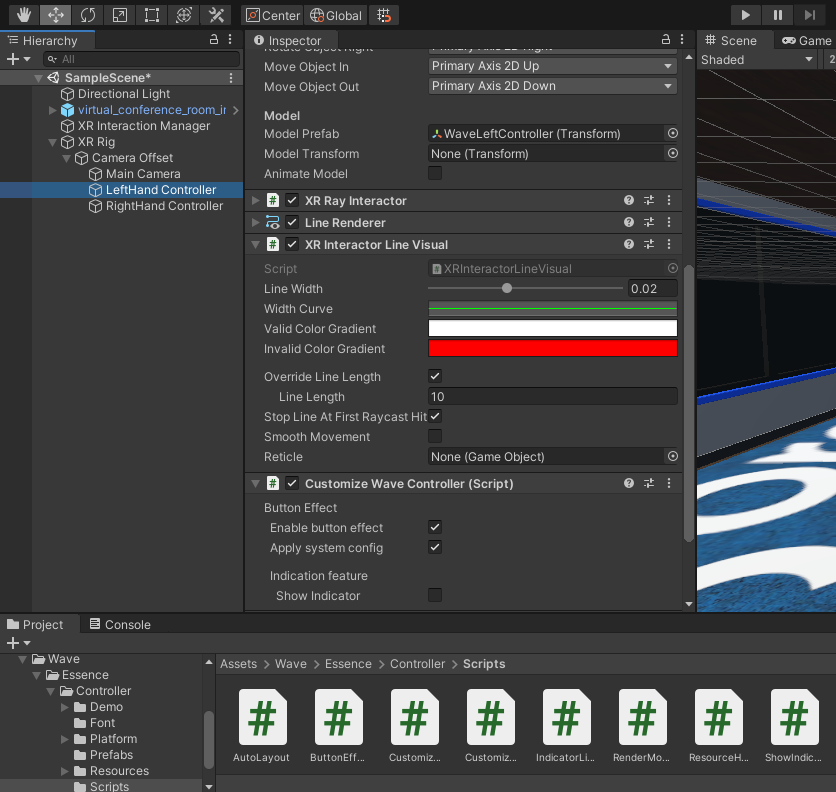

Next, navigate to the Wave > Essence > Scripts folder and drag the CustomizeWaveController script onto the LeftController Game Object.

Repeat these steps for the RightController Game Object, adding the WaveRightController prefab and the CustomizeWaveController script.

That’s all it takes to set up the controllers so they automatically show the 3D model that matches the HMD and controllers the end user is running.

One thing to keep in mind: since this is VR, users will likely want some 3D models to interact with. If you plan to add XR Grab Interactable models to the scene, add 3D cubes with box colliders just below any stationary or solid Game Objects in your virtual environment. This keeps the XR Grab Interactable models from falling through those environment objects when the scene loads. Later in this guide, we’ll add a floor with a collider and a teleportation system.

Note: XR Grab Interactable concepts are beyond the scope of this guide, so I won’t go into detail, but for more information, take a look at the Unity guide on using interactables with the XR Interaction Toolkit.

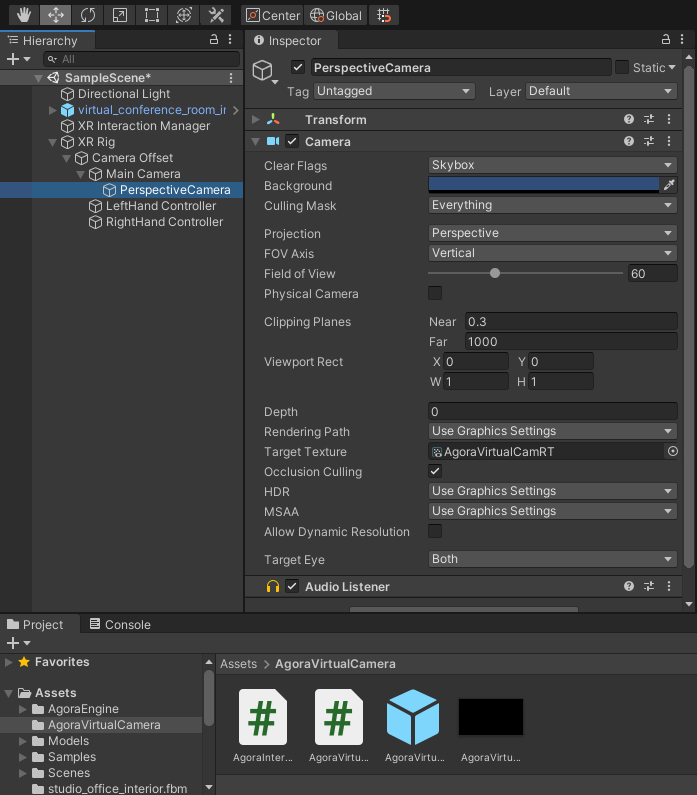

Add the Perspective Camera and the Render Texture

The final modification we’ll make to our XR Rig is to add a Camera Game Object with a Render Texture as a child of the Main Camera. This will allow us to stream the VR user’s perspective into the channel. For this demo, I named the Camera Game Object PerspectiveCamera and set AgoraVirtualCamRT (from the Agora Virtual Camera package) as the Camera’s Target Texture.

Add the UI Buttons and Remote Video

Now that the XR Rig is set up and the scene is properly lit, we are ready to start adding our UI elements.

We need to add a few different UI game objects: a join button, a leave button, a button to toggle the mic, a button to quit the app, and a text box to display our console logs in the Virtual Reality scene.

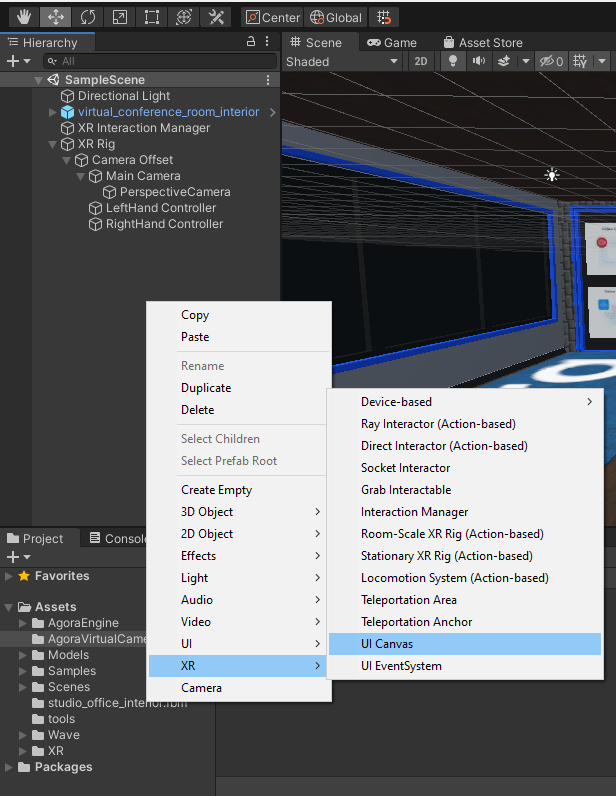

Unity’s XR Interaction framework has XR-enabled UI Canvas Game Objects that we can use to quickly place our buttons. Right-click in the Scene Hierarchy and select XR > UI Canvas.

We’ll repeat this process two more times so at the end there will be three canvas Game Objects in our scene.

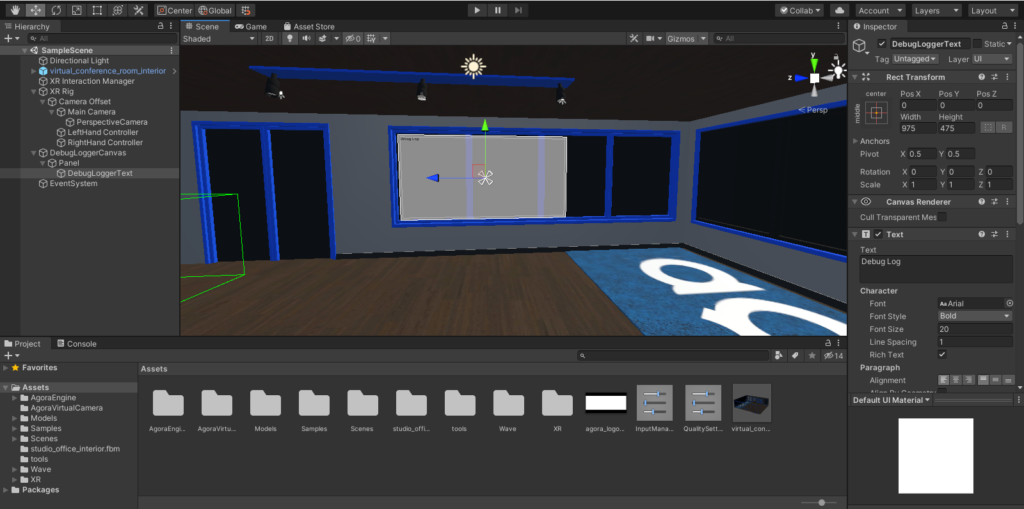

The first canvas will be used for our console logs. Rename this Game Object DebugLoggerCanvas, then set the width to 1000, the height to 500, and the scale to (0.025,0.025,0.025) so it fits within our 3D environment. Add a Panel Game Object as a child of the DebugLoggerCanvas, then add a Text Game Object as a child of the Panel and name it DebugLoggerText. The DebugLoggerText will display the output from the logger. We want the logs to be legible, so we’ll set the DebugLoggerText width to 975 and height to 475. Last, position the DebugLoggerCanvas in the scene. I’ve chosen to place it on the wall with the four window panes.

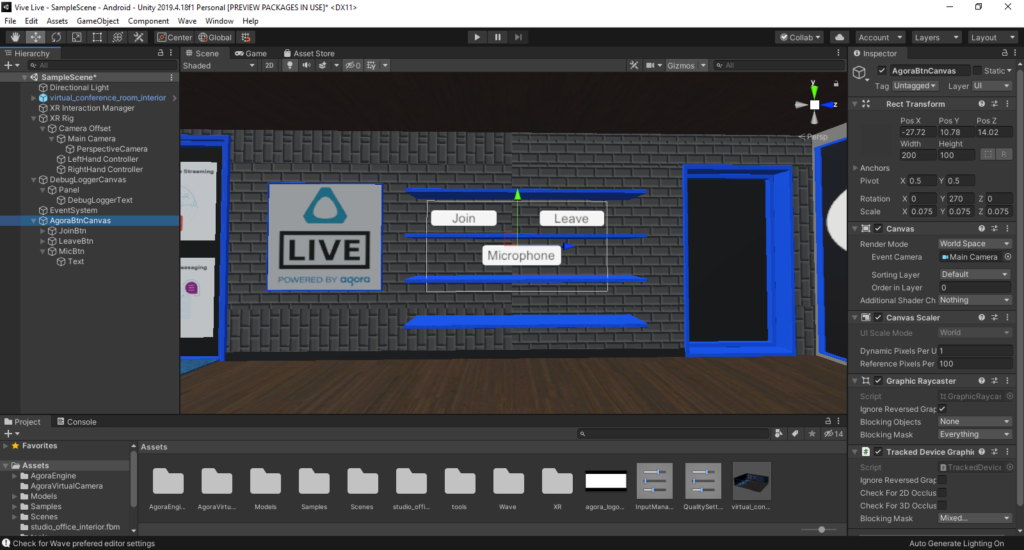

The next UI Canvas will contain the Join, Leave, and Microphone buttons. Right-click within the Scene Hierarchy, add another XR UI Canvas to the scene, rename it AgoraBtnCanvas, and set the width to 200, the height to 100, and the scale to (0.075,0.075,0.075). Add three Button Game Objects as children of the AgoraBtnCanvas. Scale and position the buttons in the canvas however you want. Below is how I positioned the buttons on the shelves:

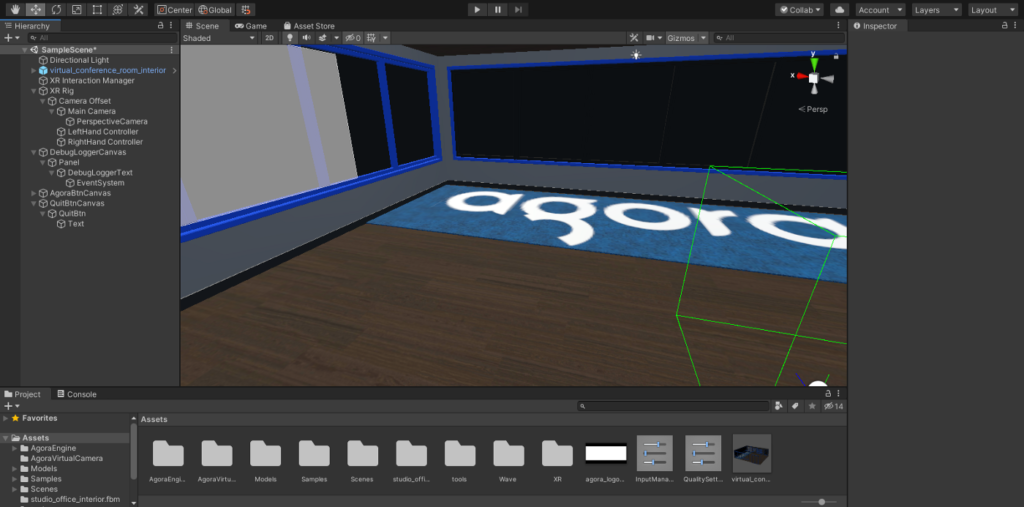

The third UI Canvas will contain the Quit button, which allows users to exit our application. Right-click in the Scene Hierarchy, add another XR UI Canvas to the scene, give it a distinct name (for example, QuitBtnCanvas), and set the width to 100, the height to 50, and the scale to (0.075,0.075,0.075). Add a Button Game Object as a child of this canvas. Scale and position the button in the canvas however you want. Below is how I positioned the button:

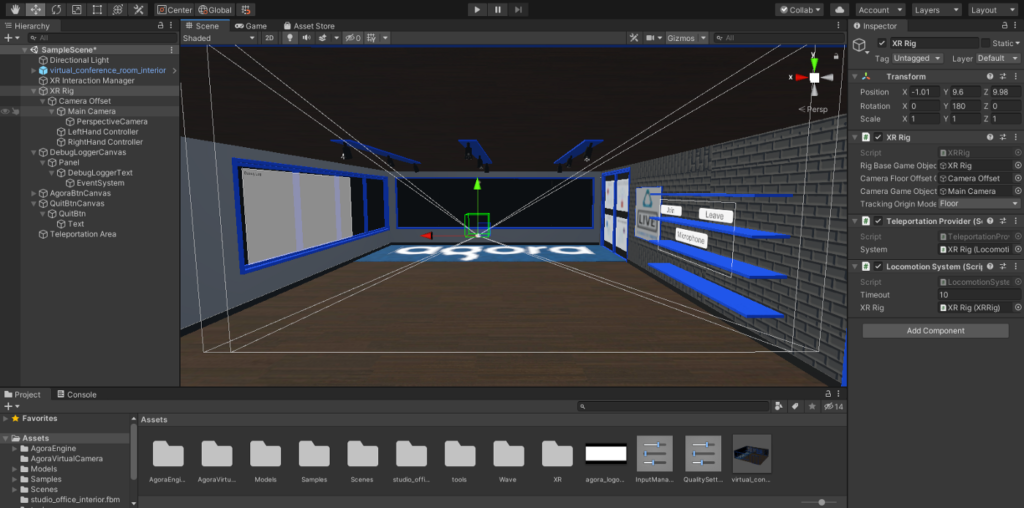
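The canvas sizes above are easier to reason about in world units. For a World Space canvas with no scaled parent, its size in the scene is the canvas width and height multiplied by its scale. The short Python sketch below does that arithmetic for the three canvases, plus the padding left around DebugLoggerText, assuming the Panel fills the canvas and the text is centered in it. The “Quit button canvas” label is descriptive, not a Game Object name.

```python
from typing import Dict, Tuple


def world_size(width: float, height: float, scale: float) -> Tuple[float, float]:
    """Size in world units of a World Space canvas with a uniform scale and no scaled parent."""
    return width * scale, height * scale


def padding(outer: float, inner: float) -> float:
    """Space left on each side when a centered child of size `inner` sits inside `outer`."""
    return (outer - inner) / 2


# (width, height, uniform scale) as set in the steps above.
CANVASES: Dict[str, Tuple[float, float, float]] = {
    "DebugLoggerCanvas": (1000, 500, 0.025),
    "AgoraBtnCanvas": (200, 100, 0.075),
    "Quit button canvas": (100, 50, 0.075),
}

if __name__ == "__main__":
    for name, (w, h, s) in CANVASES.items():
        ww, wh = world_size(w, h, s)
        print(f"{name}: {ww:.2f} x {wh:.2f} world units")
    # Assumes the Panel fills the canvas and DebugLoggerText is centered in it.
    print(f"DebugLoggerText padding: {padding(1000, 975)} x {padding(500, 475)}")
```

Running it prints 25.00 x 12.50 world units for the DebugLoggerCanvas, 15.00 x 7.50 for the AgoraBtnCanvas, and 7.50 x 3.75 for the Quit button canvas, with 12.5 canvas units of padding on each side of the log text. Authoring at a high resolution and scaling down keeps text crisp while the canvas stays a reasonable size in the room.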

This is a pretty big space, so let’s add a teleportation system so the user can move around in it. Select the XR Rig in the Scene Hierarchy and add the Teleportation Provider and Locomotion System scripts to the Game Object. Make sure to set the scripts’ references to the XR Rig. While it’s not required, setting the reference helps reduce the load time. The last step in enabling teleportation is to add a Teleportation Area and scale it to match the floor. The teleportation system allows the user to point and click to move anywhere on the floor plane.
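The XR Interaction Toolkit handles the pointing for you, but the core idea is a ray cast from the controller against the Teleportation Area. The Python sketch below is a conceptual illustration, not the toolkit’s implementation: it intersects a controller ray with a flat floor at a given height and rejects hits outside a rectangular area. The area’s size and its placement at the world origin are assumptions for the example.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def teleport_target(origin: Vec3, direction: Vec3, floor_y: float = 0.0,
                    half_extent_x: float = 10.0,
                    half_extent_z: float = 10.0) -> Optional[Vec3]:
    """Point where a controller ray hits a horizontal floor area centered at the origin.

    Returns None when the ray is parallel to the floor, points away from it,
    or lands outside the (assumed) rectangular teleportation area.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dy) < 1e-9:
        return None  # ray runs parallel to the floor
    t = (floor_y - oy) / dy  # distance along the ray to the floor plane
    if t <= 0:
        return None  # the floor is behind the controller
    hx, hz = ox + t * dx, oz + t * dz
    if abs(hx) > half_extent_x or abs(hz) > half_extent_z:
        return None  # outside the teleportation area
    return (hx, floor_y, hz)
```

For a controller held 1.5 units above the floor and pointed 45 degrees downward along +z, teleport_target((0.0, 1.5, 0.0), (0.0, -1.0, 1.0)) lands 1.5 units ahead at (0.0, 0.0, 1.5). Scaling the area to the whole floor, as described above, is what lets almost any downward aim produce a valid target.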

The last two Game Objects we need to add to the scene are the RemoteVideoRoot and the RemoteScreenRoot. Add an empty Game Object and rename it RemoteVideoRoot. This will be the root node under which new video planes will spawn as remote users connect to the channel. I’ve chosen to place the RemoteVideoRoot on the wall made entirely of windows.

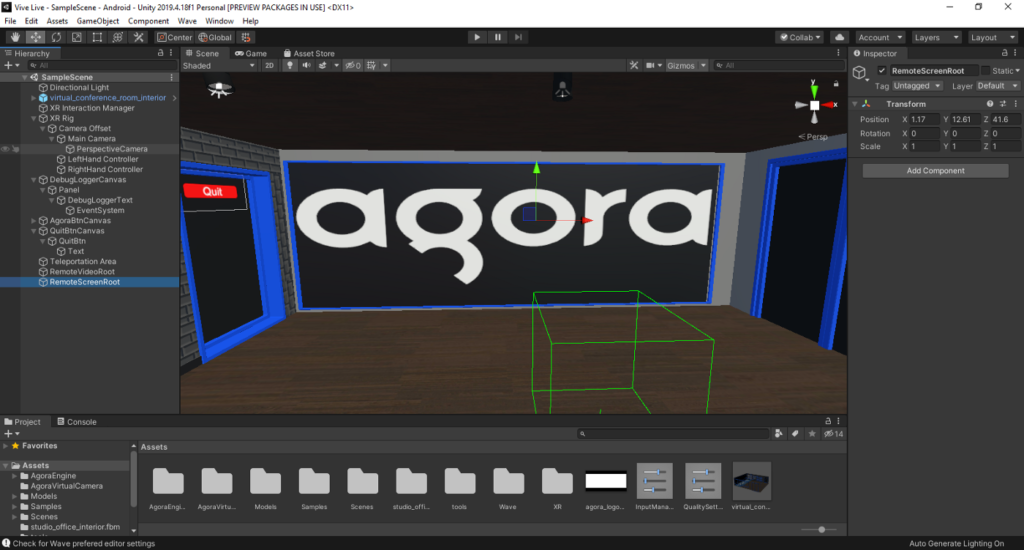

Last, add an empty Game Object and rename it RemoteScreenRoot. This will be the root node under which the video plane will spawn whenever a screen is shared into the channel. I’ve chosen to place the RemoteScreenRoot on the glass wall opposite the RemoteVideoRoot.
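How the prefab arranges the spawned planes isn’t covered in this guide, so treat the following as a hypothetical Python sketch of the bookkeeping involved: keep a list of connected remote user IDs, and recompute each plane’s position relative to RemoteVideoRoot whenever someone joins or leaves. The single-row layout, plane width, and gap are my assumptions.

```python
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]


def layout_planes(uids: List[int], plane_width: float = 1.6,
                  gap: float = 0.2) -> Dict[int, Position]:
    """Local positions (relative to the root) for one video plane per remote user.

    Planes sit in a single row centered on the root (assumed layout).
    """
    step = plane_width + gap
    start = -(len(uids) - 1) * step / 2
    return {uid: (start + i * step, 0.0, 0.0) for i, uid in enumerate(uids)}


class RemoteVideoRootSketch:
    """Hypothetical tracker for planes spawned as remote users come and go."""

    def __init__(self) -> None:
        self.uids: List[int] = []

    def add_user(self, uid: int) -> Dict[int, Position]:
        if uid not in self.uids:
            self.uids.append(uid)
        return layout_planes(self.uids)

    def remove_user(self, uid: int) -> Dict[int, Position]:
        if uid in self.uids:
            self.uids.remove(uid)
        return layout_planes(self.uids)
```

Recomputing the whole row on every change keeps the planes centered on the root no matter who leaves.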

Implement the Agora Prefab

Now that we have all of our environment and UI elements added to the scene, we are ready to drag the Agora Virtual Camera Prefab onto the Scene Hierarchy.

First, we’ll add our Agora AppID and either a temp token or the URL for a token server. The Agora prefab assumes the token server returns the same JSON response as the Agora Token Server in Golang guide.
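Since the prefab accepts either a temporary token or a token server URL, it can help to see that choice spelled out. In this Python sketch the temporary token takes precedence when both are set (my assumption, not necessarily the prefab’s behavior), and the server’s reply is assumed to be JSON with an “rtcToken” field, which is my understanding of the Golang guide’s response format. The fetch function is passed in so the logic can be tested without a network.

```python
import json
from typing import Callable, Optional


def resolve_token(temp_token: Optional[str], token_url: Optional[str],
                  fetch: Callable[[str], str]) -> str:
    """Pick the token to join with.

    A temporary token wins if present (assumed precedence); otherwise the
    token server is queried via `fetch`, which returns the raw response body.
    Assumption: the server replies with JSON shaped like {"rtcToken": "..."}.
    """
    if temp_token:
        return temp_token
    if not token_url:
        raise ValueError("set either a temporary token or a token server URL")
    data = json.loads(fetch(token_url))
    token = data.get("rtcToken")
    if not isinstance(token, str) or not token:
        raise ValueError("token server response has no 'rtcToken' string")
    return token
```

In a real script, fetch could wrap an HTTP GET, for example a lambda around urllib.request.urlopen that reads and decodes the body; the query parameters your token server expects aren’t covered here.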

We also need to choose a channel name. For this example, I’ll use AGORAVIVE. Make a note of it, because the web client will need to use the same channel name later.

Note: For users to be correctly matched, the AppID and channel name must match exactly, because channel names on Agora’s platform are case-sensitive.

The next step is as simple as it sounds: drag the various Game Objects that we just created into the prefab’s input fields.
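Because a single character in the wrong case puts users in different channels, a quick check before joining can save some debugging. The Python sketch below validates a channel name and compares two AppID/channel pairs exactly. The length limit (under 64 bytes) and the allowed character set reflect Agora’s API reference as I understand it at the time of writing; confirm them against the current docs.

```python
# Assumption: Agora's documented rules at the time of writing are ASCII letters,
# digits, and the punctuation below (including space), under 64 bytes total.
ALLOWED_PUNCTUATION = set(" !#$%&()+-:;<=.>?@[]^_{}|~,")


def is_valid_channel_name(name: str) -> bool:
    """True if `name` is non-empty, under 64 bytes, and uses only allowed characters."""
    if not name or len(name.encode("utf-8")) >= 64:
        return False
    return all((c.isascii() and c.isalnum()) or c in ALLOWED_PUNCTUATION for c in name)


def in_same_channel(app_id_a: str, channel_a: str,
                    app_id_b: str, channel_b: str) -> bool:
    """Exact, case-sensitive comparison: no lowercasing or trimming."""
    return app_id_a == app_id_b and channel_a == channel_b
```

Deliberately avoiding normalization (such as lowercasing) mirrors the platform’s behavior: “AGORAVIVE” and “agoravive” are different channels.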

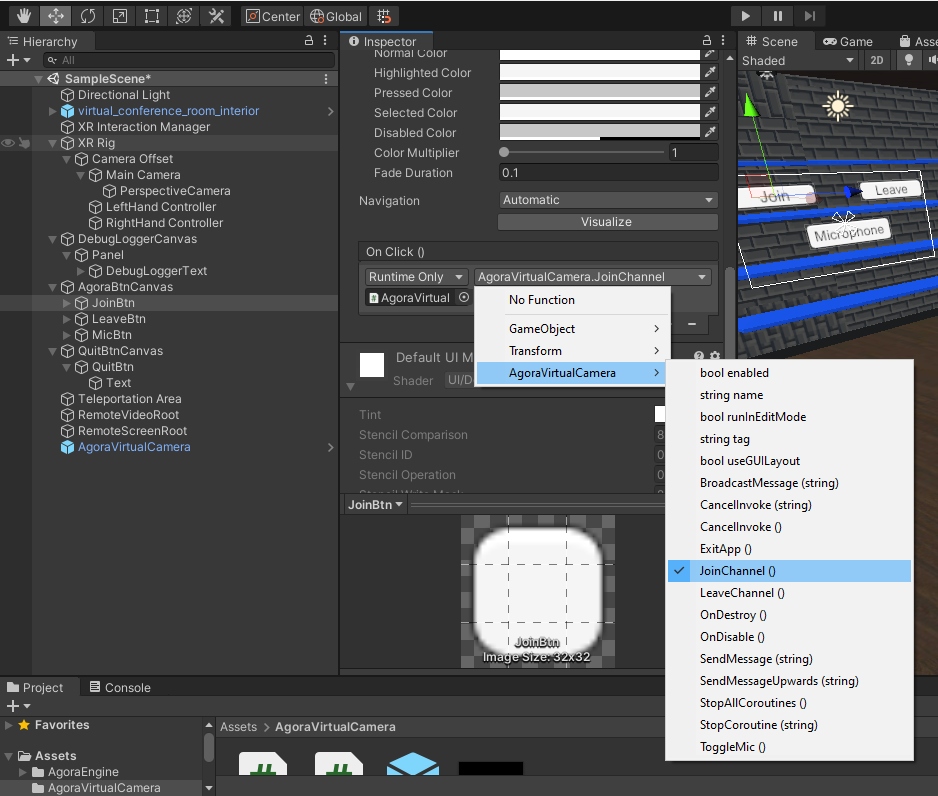

There is one last step before we can test what we’ve built. Add an On Click handler to the Join, Leave, Microphone, and Quit buttons. For each of these, we’ll use the Agora Virtual Camera prefab as the On Click reference and select the corresponding function for each button. For example, the JoinBtn On Click would call AgoraVirtualCamera > JoinChannel.

At this point, we can test the work we’ve done by building the project to our VR HMD. If you are using a Vive headset, the build settings should look like this:

After you initiate the build, the project will take a few minutes to build and deploy to the device. Once the app is deployed, you’ll see a loader screen before you are dropped into the virtual environment.

Click the Join button to join the channel. Once you have joined the channel, the microphone button will turn green and some logs will appear in the debug panel. When you click the Mic button, it will turn red to indicate that the mic is muted.
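The button feedback described above amounts to a small state machine. Here is a hypothetical Python model of it, not the prefab’s code: joining turns the mic button green, toggling the mic while joined switches between green and red, and leaving resets it. The neutral state before joining, unmuting on every join, and ignoring mic toggles outside a channel are my assumptions.

```python
from enum import Enum


class MicColor(Enum):
    NEUTRAL = "neutral"  # not in a channel (assumed)
    GREEN = "green"      # joined, mic live
    RED = "red"          # joined, mic muted


class ButtonStateSketch:
    """Hypothetical model of the Join/Leave/Mic feedback; method names are illustrative."""

    def __init__(self) -> None:
        self.joined = False
        self.muted = False

    def join_channel(self) -> None:  # what the JoinBtn On Click triggers
        self.joined = True
        self.muted = False  # assumption: every join starts unmuted

    def leave_channel(self) -> None:
        self.joined = False
        self.muted = False

    def toggle_mic(self) -> None:
        if self.joined:  # assumption: ignored outside a channel
            self.muted = not self.muted

    @property
    def mic_color(self) -> MicColor:
        if not self.joined:
            return MicColor.NEUTRAL
        return MicColor.RED if self.muted else MicColor.GREEN
```

Deriving the color from the state, rather than setting it by hand in each handler, keeps the button from ever showing a color that disagrees with the actual mic state.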

Part 3: Testing VR to Web Streams

In the last part of this guide we’ll use a live streaming web app to test the video streaming between VR and non-VR users.

Set Up a Basic Live Streaming Web App

The Agora platform makes it easy for developers to build a live video streaming application on many different platforms. Using the Agora Web SDK, we can build a group video streaming web app that allows users to join as either active participants or passive audience members. Active participants broadcast their camera stream into the channel and are visible in the VR scene. Passive audience members don’t broadcast; they only view the stream from the VR headset along with the streams of the active participants.
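In Agora’s live-broadcast mode, these two kinds of participants correspond to the “host” and “audience” client roles, which the Web SDK sets with setClientRole. The Python sketch below models only who sees whom, assuming the VR headset joins as a host: since only hosts publish, each viewer sees every host except themselves.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Participant:
    name: str
    role: str  # "host" publishes a stream; "audience" only watches


def streams_visible_to(viewer: Participant, everyone: List[Participant]) -> List[str]:
    """Names of participants whose streams `viewer` receives: every other host."""
    return [p.name for p in everyone if p.role == "host" and p is not viewer]
```

With a VR headset and one web user as hosts plus one web audience member, the headset sees only the web host, the web host sees only the headset, and the audience member sees both.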

In the interest of brevity, I won’t dive into the minutiae of building a live streaming web app in this guide, but you can check out my guide How To: Build a Live Broadcasting Web App.

For this example, we’ll use a prebuilt example similar to the web app built in my guide How To: Build a Live Broadcasting Web App. If you are interested in running the web client locally or customizing the code, you can download the source code.

Test the Streams Between VR and the Web

Use the links below to join as either an active or a passive participant.

Once you’ve launched the web view and connected to the channel, launch the VR app and click the Join button. Once the VR headset joins the channel, you will be able to see the VR user’s perspective in the web browser.

Done!

Wow, that was intense! Thanks for following and coding along with me. Below is a link to the completed project. Feel free to fork and make pull requests with any feature enhancements. Now it’s your turn to take this knowledge and go build something cool.

Other Resources

For more information about the Agora Video SDK, see the Agora Video Web SDK API Reference or Agora Unity SDK API Reference. For more information about the Agora RTM SDK, see the Agora RTM Web SDK API Reference.

For more information about the Vive Wave SDK, see the Vive Wave Unity Reference.

I also invite you to join the Agora Developer Slack community.