The Story of Team unSting: Why & How

During AngelHack Bangalore this year, our team came up with an idea that would let us give back to our community, which was devastated by the Chennai floods in 2016. Schools were closed for a long time because they were used to house those who had lost their homes. Our teachers, who helped with the relief effort, told us stories of countless people who struggled because of the disaster.

When we listened to the speakers at AngelHack, all of these memories came back to us and our thoughts aligned. We were inspired to come up with a solution that would aid relief efforts during natural disasters.

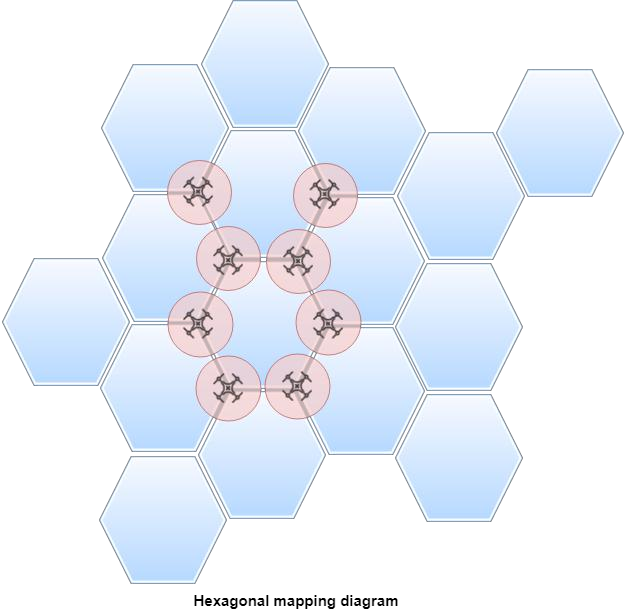

The basic idea is to deploy several autonomous drones simultaneously. They move together to form a hexagonal grid, which maximizes the area covered while using as few drones as possible. The drones then use readings from several sensors, such as the camera and heat sensors, to detect pockets of people.
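As a rough illustration of the hexagonal layout, here is a minimal sketch that places drones on a hexagonal lattice inside a rectangular search area. The function name `hex_grid_positions`, the flat rectangular area, and the idea of a fixed circular sensing radius per drone are assumptions made for this example; they are not taken from our simulation code.

```python
import math


def hex_grid_positions(width_m, height_m, sensing_radius_m):
    """Return (x, y) lattice points, in meters, that lie inside the rectangle.

    Assumption: each drone senses a circle of radius sensing_radius_m.
    Neighbouring drones are spaced sqrt(3) * radius apart, the spacing at
    which circles on a hexagonal lattice just cover the plane without gaps.
    """
    dx = math.sqrt(3) * sensing_radius_m  # spacing between drones in a row
    dy = 1.5 * sensing_radius_m           # spacing between rows
    positions = []
    rows = int(height_m // dy) + 1
    for row in range(rows):
        # Every other row is shifted by half a step to form the hexagons.
        offset = dx / 2 if row % 2 else 0.0
        cols = int((width_m - offset) // dx) + 1
        for col in range(cols):
            positions.append((offset + col * dx, row * dy))
    return positions


if __name__ == "__main__":
    grid = hex_grid_positions(100, 100, 10)
    print(len(grid), "drone positions, first few:", grid[:3])
```

Only lattice points that fall inside the rectangle are generated, so a real deployment would likely need an extra ring of drones to cover the edges fully.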

Since we came up with the solution during the hack, we didn’t have actual drones to use, so we did two things to overcome this hurdle:

- Made a simulation using Unity that shows how the hexagonal mapping and aerial movement would look when deployed on real drones.

- Used Android Things to run the drone’s algorithms. Since Android applications can be deployed to Android Things, we used our phones as stand-ins for the drones.

Now that we’ve explained how the drones work, let’s dive a little deeper into what makes our platform really special.

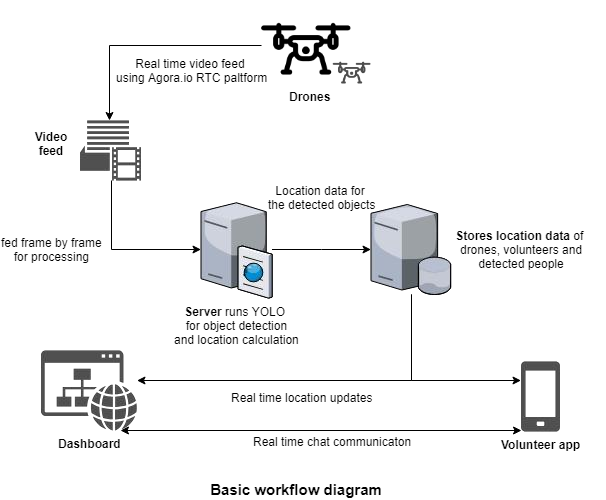

We detect people using a real-time object detection algorithm called YOLO (You Only Look Once). It is one of the more recent advances in computer vision and lets us detect and count people efficiently and accurately. Since it is computationally expensive, it cannot run on the drone itself. Instead, we use the Agora Platform to stream the camera feed in real time to our servers, where the algorithm runs. (We ran into a major hurdle during AngelHack here too! More on that later 😀)
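To make the counting step concrete, here is a small sketch of how detector output might be turned into a head count. The `Detection` tuple, the `"person"` label, and the 0.5 confidence threshold are hypothetical; the real output format depends on which YOLO implementation is used.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """Hypothetical shape of a single detector result."""
    label: str
    confidence: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels


def count_people(detections, min_confidence=0.5):
    """Keep confident 'person' detections and return (count, detections)."""
    people = [
        d for d in detections
        if d.label == "person" and d.confidence >= min_confidence
    ]
    return len(people), people


if __name__ == "__main__":
    frame_detections = [
        Detection("person", 0.91, (120, 80, 160, 190)),
        Detection("car", 0.88, (300, 200, 420, 260)),
        Detection("person", 0.32, (500, 90, 530, 170)),  # too uncertain
    ]
    count, people = count_people(frame_detections)
    print(count, "person(s) detected")
```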

We also gather and display the following data:

- Locations of volunteers (using the Volunteer Android app).

- The number of people who are in distress and require help, along with their locations. These data points, we believe, will be invaluable to volunteers who might not know where people need help.

We also implemented a chat system that volunteers can use to coordinate logistics.

The important components of our project:

- A volunteer app powered by Agora

- Streaming video from the drone through the Agora Android SDK

- A server receiving the stream through the Agora Web SDK

- A central dashboard that monitors all the identified data points

Since we opted to use Android Things, deploying the code on Android (the drone simulation) was a breeze. But the server side was a whole other story. Agora’s services greatly reduced our need to write our own video servers and clients for different platforms, because Agora had implementations for most platforms. Their services were also easy to implement and had several advantages, including:

- Secure communication

- Low latency

- Cross-platform capabilities

This was very important to us because we were running YOLO on the incoming stream and had decided to rely on Agora’s platform to integrate the video servers smoothly with our deep learning algorithms.

The most important part of the project was using Android on the drones to capture the live camera feed. This lets us port the application to an Android Things kit that can be integrated with a drone. Since we didn’t have a drone at that point, we built an Android app that we can later port to one.

The server read the stream from the Android app and applied the YOLO deep learning algorithm to locate humans in each frame of the video. We then approximated the physical location of each person from the video feed: we wrote an algorithm that estimates the location of an object in an aerial image given its bounding box and the coordinates of the drone. It was fairly accurate and took factors like the radius of curvature of the Earth into account.

The most challenging part was relaying the video feed to the servers that ran these algorithms, because no Agora Server SDK was available at the time of development.
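To illustrate the location estimate described above, here is a minimal sketch of one way to map a bounding box to latitude and longitude. It is not our exact algorithm. It assumes the camera points straight down with the top of the image facing north, flat ground below the drone, a known horizontal field of view, and a spherical Earth; all function names and default values are made up for this example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def pixel_to_ground_offset(cx, cy, image_w, image_h, altitude_m, hfov_deg):
    """Offset (east, north) in meters from the point directly below the drone."""
    ground_width = 2 * altitude_m * math.tan(math.radians(hfov_deg) / 2)
    meters_per_pixel = ground_width / image_w
    east = (cx - image_w / 2) * meters_per_pixel
    north = (image_h / 2 - cy) * meters_per_pixel  # image y grows downward
    return east, north


def offset_to_latlon(lat_deg, lon_deg, east_m, north_m):
    """Move along a great circle from (lat, lon) by the given offset."""
    distance = math.hypot(east_m, north_m)
    bearing = math.atan2(east_m, north_m)  # 0 = north, pi/2 = east
    ang = distance / EARTH_RADIUS_M        # angular distance on the sphere
    lat1, lon1 = math.radians(lat_deg), math.radians(lon_deg)
    lat2 = math.asin(math.sin(lat1) * math.cos(ang)
                     + math.cos(lat1) * math.sin(ang) * math.cos(bearing))
    lon2 = lon1 + math.atan2(
        math.sin(bearing) * math.sin(ang) * math.cos(lat1),
        math.cos(ang) - math.sin(lat1) * math.sin(lat2))
    return math.degrees(lat2), math.degrees(lon2)


def locate_person(box, image_size, drone_lat, drone_lon, altitude_m,
                  hfov_deg=60.0):
    """Estimate (lat, lon) of the center of a bounding box."""
    x_min, y_min, x_max, y_max = box
    image_w, image_h = image_size
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    east, north = pixel_to_ground_offset(
        cx, cy, image_w, image_h, altitude_m, hfov_deg)
    return offset_to_latlon(drone_lat, drone_lon, east, north)


if __name__ == "__main__":
    print(locate_person((700, 300, 740, 380), (1280, 720),
                        13.0827, 80.2707, altitude_m=50.0))
```

A real drone would also need its heading and camera tilt factored in, which this sketch leaves out.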

We tackled the relay problem with a two-part strategy:

- We strung together a web application that would receive the stream from the Android app and render it in the page using Agora’s Web SDK. This was a normal use of the Web SDK, and we deployed it to a static file host.

- In the Python server, we wrote a script that drove a headless browser and extracted frames from it. We used pyppeteer, an unofficial Python port of Google’s Puppeteer library, to accomplish this. It gave us a headless Chrome instance in which we loaded the web application from the previous step, periodically took automated screenshots of the rendered video, and fed them into the deep learning algorithm. All of this was done asynchronously to boost performance.

```python
import io
import time

import numpy as np
from PIL import Image
from pyppeteer import launch

# yolo(), view() and update_locations() are defined elsewhere in our project.


async def main(execpath):
    browser = await launch({'executablePath': execpath})
    page = await browser.newPage()
    await page.goto('http://unsting-relay.netlify.com')  # Points to Web SDK
    frames_cnt = 0
    try:
        while True:
            initial = time.time()
            img = await page.screenshot()  # PNG bytes of the rendered page
            image = Image.open(io.BytesIO(img)).convert('RGB')
            img = np.array(image)
            img = np.roll(img, 1, axis=-1)  # rotate the color channels by one
            pred = yolo(img)  # We pass each frame to perform yolo
            if len(pred) > 0:
                img = np.roll(img, 1, axis=-1)
                view(img, pred)
                update_locations(pred)
    finally:
        # Make sure the headless browser is shut down when the loop stops.
        await browser.close()
```

We were essentially running client-side code on the server. Once the frames were loaded into the Python environment, the rest of the process was comparatively straightforward. We integrated our backend system with the dashboard and volunteer app components of our project.
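One way to keep a screenshot loop like the one above from stalling while the detector runs is to decouple capture from inference with a bounded queue. The sketch below shows the pattern with placeholder callables; `grab`, `detect`, and `run_pipeline` are hypothetical names, and this is not the exact structure of our server.

```python
import asyncio


async def capture_frames(grab, queue, n_frames):
    """Producer: fetch frames and hand them to the consumer."""
    for _ in range(n_frames):
        frame = await grab()
        await queue.put(frame)  # waits if the consumer has fallen behind
    await queue.put(None)       # sentinel: no more frames


async def process_frames(detect, queue, results):
    """Consumer: run the blocking detector in a worker thread."""
    loop = asyncio.get_running_loop()
    while True:
        frame = await queue.get()
        if frame is None:
            break
        # run_in_executor keeps the event loop free while detect() runs.
        results.append(await loop.run_in_executor(None, detect, frame))


async def run_pipeline(grab, detect, n_frames, max_pending=2):
    """Run capture and detection concurrently and return the results."""
    queue = asyncio.Queue(maxsize=max_pending)
    results = []
    await asyncio.gather(
        capture_frames(grab, queue, n_frames),
        process_frames(detect, queue, results),
    )
    return results


if __name__ == "__main__":
    async def fake_grab():
        await asyncio.sleep(0.01)  # stands in for page.screenshot()
        return "frame"

    print(asyncio.run(run_pipeline(fake_grab, str.upper, n_frames=3)))
```

The bounded queue applies backpressure: if detection is slower than capture, the producer pauses instead of piling up stale frames in memory.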

All of our hard work was worth it. Building this product would have been almost impossible without the platform, the infrastructure, and the guidance we received from Agora during the hack. Agora supported us well through their Slack workspace and encouraged us to explore the platform. Agora’s adaptive solution fit our needs and allowed us to create our application.

We learned a lot from Sid, who explained how customizable Agora is: you can even reduce the resolution to maintain the frame rate on a high-latency network. For our project, we wanted every frame to be captured even under high latency. The resolution tradeoff was viable for us since YOLO tracks humans pretty well even in low-resolution images.

Our end product is a great start for a really cool project idea, with lots of room for improvement and polish. We are currently concentrating our efforts on turning this idea into a sustainable reality.

In the end, we want our product to be a complete solution that can aid people every step of the way, which is why we are expanding the project further. One component we are working on is a blockchain-based supply chain that organizations can use to track donations meant for people desperately in need. This supply chain is intended to ensure that donations reach the people who need them and to reduce the opportunity for corruption by middlemen. This is especially important in developing countries like India, where such cases of corruption are widespread.

We are also building alert mechanisms into our product so that first responders can react to situations faster. These rely on predictive analysis of weather and disaster data. With time series analysis, it might be possible to help predict disasters, which could be a tremendous help to organizations trying to assist people who may be facing them.